Large Language Models (LLMs) have quickly become as familiar as our morning coffee.

They have revolutionized the world since their inception, and best of all, they’re free!

Except, they’re not.

LLMs like ChatGPT may be marvels of technology, but they come with a hefty hidden price tag – their voracious appetite for energy.

Energy hogs

That cup of coffee you’re drinking? That requires about 0.5 kilowatt-hours (kWh).

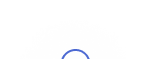

ChatGPT’s daily energy consumption? Approximately 1 gigawatt-hour (GWh), which is roughly the daily energy consumption of 33,000 US households.

To repeat: every single day, ChatGPT consumes as much energy as roughly 33,000 US homes.
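Equivalences like this come down to a single division: daily consumption over the daily use of one "unit" (a household, a cup of coffee). The sketch below runs that check. The 1 GWh and 0.5 kWh figures come from the text; the household figure of 29 kWh per day is an assumption, roughly the US average, and changing it changes the result proportionally.

```python
# Back-of-the-envelope check of ChatGPT's daily energy use against everyday
# equivalents. HOUSEHOLD_DAILY_KWH is an ASSUMPTION (roughly the US average).
CHATGPT_DAILY_KWH = 1_000_000  # 1 GWh per day, as cited in the text
HOUSEHOLD_DAILY_KWH = 29       # assumed average US household use per day
COFFEE_CUP_KWH = 0.5           # energy per cup of coffee, as cited in the text


def equivalents(daily_kwh: float, per_unit_kwh: float) -> float:
    """How many units (households, cups, ...) use the same energy per day."""
    return daily_kwh / per_unit_kwh


households = equivalents(CHATGPT_DAILY_KWH, HOUSEHOLD_DAILY_KWH)
cups = equivalents(CHATGPT_DAILY_KWH, COFFEE_CUP_KWH)

print(f"US households: {households:,.0f}")  # US households: 34,483
print(f"Cups of coffee: {cups:,.0f}")       # Cups of coffee: 2,000,000
```

In other words, one day of ChatGPT is about two million cups of coffee.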

That’s a lot of cups of coffee! And we’re still relatively early in the adoption of LLMs.

Vast data centers are already needed to handle this processing load, and demand is only set to increase.

Water ’bout that

And it’s not just energy that is being used. LLMs might soon be competing with us for the water in our morning coffee.

Massive computational models run on hefty, heat-generating computers, and all of them need seriously powerful cooling.

In fact, ChatGPT guzzles 500ml of water for every 5 to 50 queries it answers, putting huge strain on rivers in Iowa, where its Azure supercomputer is located. With ChatGPT currently receiving around 10 million prompts a day, that adds up to somewhere between 100,000 and 1 million liters of water daily.
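The daily total follows from the two cited figures: 500ml per batch of 5 to 50 queries, and 10 million prompts a day. The short sketch below computes both ends of that range; it uses only those two numbers and assumes every prompt counts as one query.

```python
# Daily water use implied by the cited figures: 0.5 liters per 5 to 50
# queries, and 10 million prompts per day (each prompt treated as one query).
WATER_PER_BATCH_L = 0.5
DAILY_PROMPTS = 10_000_000


def daily_water_liters(queries_per_batch: int) -> float:
    """Liters per day if 0.5 L is used for every `queries_per_batch` queries."""
    return DAILY_PROMPTS / queries_per_batch * WATER_PER_BATCH_L


high = daily_water_liters(5)   # thirstiest case: 0.5 L every 5 queries
low = daily_water_liters(50)   # most efficient case: 0.5 L every 50 queries
print(f"{low:,.0f} to {high:,.0f} liters per day")
# 100,000 to 1,000,000 liters per day
```

A total of 5 million liters a day would only follow if every single prompt used the full 500ml.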

In training GPT-3 alone, Microsoft may have consumed an incredible 700,000 liters of water, enough to produce 370 BMW cars or 320 Tesla electric vehicles.

With GPT-4 and future models offering more and more sophistication, water consumption isn’t going away anytime soon.

A load of hot air

Longer-term environmental impacts? LLMs come with those too.

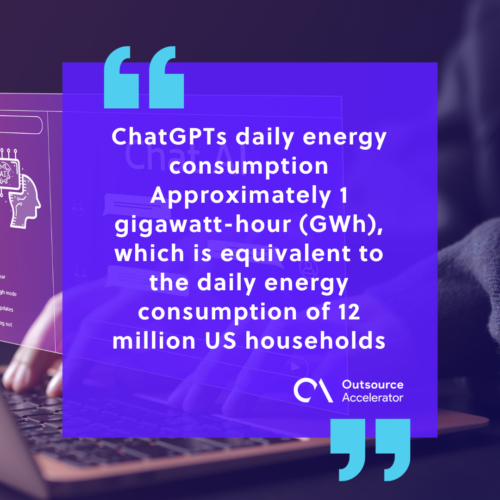

The team behind BLOOM calculated that training the model led to about 25 tons of carbon emissions, equivalent to around 60 flights between London and New York.

Companies employing these models must find ways to offset their carbon footprint with sustainable practices.

Quick wins? Try setting carbon reduction targets, or using ESG software to help measure and reduce your environmental impact.

The financial toll

Aside from the environmental burden, there is the bottom-line impact.

Data centers require substantial investments in both infrastructure and cooling systems to prevent overheating.

And the electricity bills for running them? Astronomical.

Running LLMs carries very real-world costs, and eventually businesses will pay dearly for them.

Humans not so bad

ChatGPT might look amazing while it’s free. But it is genuinely resource-intensive, and OpenAI is only picking up the bill for now.

Compare apples with apples, and the good old human workforce may start to look increasingly affordable, environmentally friendly, and efficient.

The question for your business

Is your business replacing humans with AI yet?

Independent
